Tech post: Tracking hit size in GTM with the GA Tasks API

Did you know that GA has a hit size limit? It's true. The limit is just over 8 KB, and a standard pageview hit (containing a handful of custom dimensions) from my blog comes in at around 500 bytes - 1/16th of the max size. Realistically, the vast majority of hits sent to GA don't come anywhere near the limit. But rarely - if you're tracking many dimensions/metrics/attributes at a time, as my large-enterprise clients do - hits may cross the threshold.

What happens if a hit exceeds this maximum size? It’s completely dropped from GA before it’s ever processed, without any indication that a hit was sent-but-not-received. It reminds me of this quote from the poetry of Donald Rumsfeld:

As we know,
There are known knowns.
There are things we know we know.
We also know
There are known unknowns.
That is to say
We know there are some things
We do not know.
But there are also unknown unknowns,
The ones we don't know
We don't know.

(Donald Rumsfeld, Feb. 12, 2002, Department of Defense news briefing)

When working with those mega-dimension setups, one starts to wonder: what don't we know about? Are we missing anything?

Let's find out!

My simplest thought on this was: how can I find out how big my historical hits are, to see if this is even really an issue? I know I wouldn't see any too-big hits in historical data (since they aren't even processed), but if there were a large number of almost-too-big hits, that would be a decent indicator that some were crossing the threshold. The short answer is: there's nothing in GA's UI that can give us that information.

At the other end of the complexity spectrum, I thought I could find a way to detect hit size in GTM, and then replace too-big hits with smaller versions of themselves. That idea seemed potentially risky, and would require a fair amount of review to determine what should be kept, and how to limit the rest. If I went through all of that work and testing, and then found out that there aren't any too-big hits, it would be a terrible return on my time investment.

The middle way I settled on implementing (for now) is this: find a way to detect hit size in GTM, and record it in a custom dimension. Let that run for a while, examine the data, and then see if I need to pursue this further.

...But how?

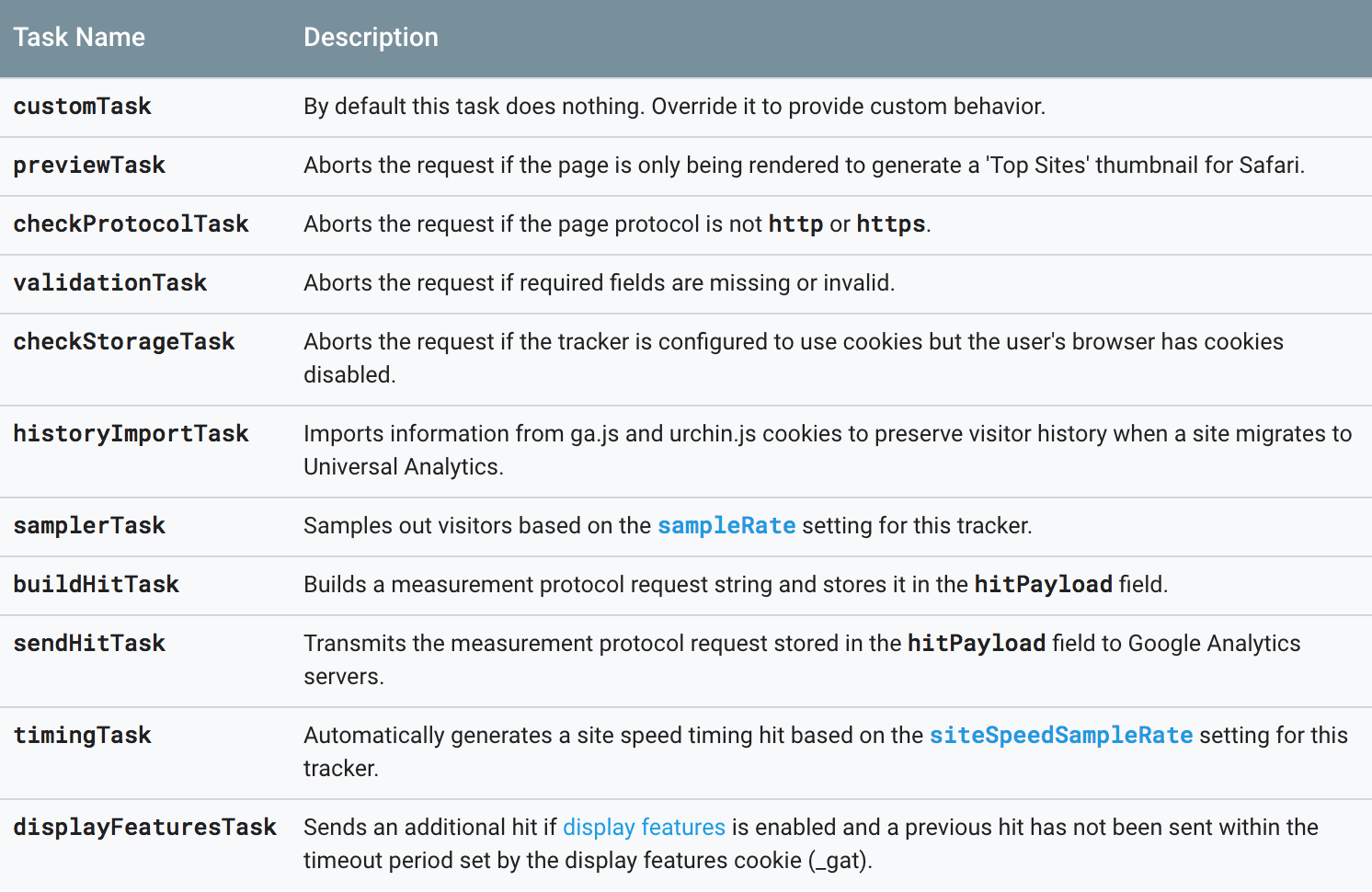

Step 1 - Finding the hit size - proved to be easier said than done. I tried various not-GA-specific things like listening for all hits and attempting to process them, but it just wasn’t workable or scalable. And then I remembered Simo Ahava’s post about using customTask to track the GA clientId into a custom dimension, and that led me to the GA Tasks API, which provides an interface to the inner workings of GA:

Use customTask to override buildHitTask

buildHitTask is what we’re looking for. After the default buildHitTask executes, a model field called ‘hitPayload’ contains the exact string that will be transmitted to GA. We can override the buildHitTask task to:

- Build the hit (using the default method)

- Measure the size of the hit

- Add the hit size as a custom dimension value

- Rebuild the hit, so the hitPayload includes the new custom dimension

The hitPayload field is already URL-encoded into ASCII, and ASCII characters are one byte each, so the hitPayload size is equal to the length of the string. With this simple logic, the data is slightly incorrect: the size we’re recording doesn’t include the bytes needed to transmit the size itself. At any rate, it’s good enough for now; the hit size will increase by 10 bytes at most when this extra dimension is added.

Cobbling together Simo’s code with Google’s example of how to add to a task, I came up with this:

    function() {
      // Modify to match the index numbers to send the data to
      var sizeDimensionIndex = 3;

      return function(model) {
        // do any customTask things here, like add the clientId a la Simo Ahava

        // prep for buildHitTask update:
        // get the default buildHitTask from model
        var defaultBuildHitTask = model.get('buildHitTask');

        // override, & call default in the override
        model.set('buildHitTask', function(model) {
          // build the hit, so we can get the size
          defaultBuildHitTask(model);

          // get the payload size & add it to the model if it exists
          try {
            var payload = model.get('hitPayload');
            // URLs are ASCII, which is 1 byte per char; payload is already URL-encoded
            model.set('dimension' + sizeDimensionIndex, payload.length);

            // at this point, if hit is too big, could error out and
            // push event to the dataLayer to send a "hit too big" event

            // re-build the hit
            defaultBuildHitTask(model);
          } catch(e) {
            // error handling if needed
            // console.log("error on payload");
            // console.log(e);
          }
        });
      }
    }
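The `payload.length` shortcut in the code above only works because the payload is already URL-encoded. Here is a minimal sketch for sanity-checking that assumption outside of GTM. The sample title, the `byteLength` and `dimensionOverhead` helpers, and the use of `encodeURIComponent` as a stand-in for GA's own encoding are all mine, for illustration only:

```javascript
// Byte length of a string once encoded as UTF-8.
function byteLength(str) {
  return new TextEncoder().encode(str).length;
}

// Characters added to the payload by one custom dimension, e.g. "&cd3=1234".
// "cd<index>" is the Measurement Protocol parameter name for custom dimensions.
function dimensionOverhead(index, size) {
  return ('&cd' + index + '=' + size).length;
}

// Hypothetical page title with non-ASCII characters.
var title = 'Café prices in €';

// Stand-in for the encoded payload that GA builds.
var encoded = 'v=1&t=pageview&dt=' + encodeURIComponent(title);

// Raw text: character count and byte count differ.
console.log(title.length, byteLength(title));

// URL-encoded text: every character is one byte, so the counts match.
console.log(encoded.length, byteLength(encoded));

// Extra characters the hit-size dimension itself adds for a four-digit size.
console.log(dimensionOverhead(3, 8192));
```

If the two numbers on the second line ever differed, the recorded hit size would undercount, and it would be safer to measure bytes rather than characters.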

Here’s what you’ll need to put this all into practice in GTM/GA:

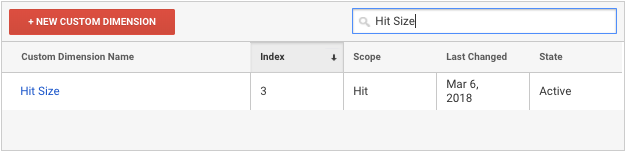

- In GA: Create a hit-scoped Custom Dimension called “Hit Size”. Let’s assume it’s dimension 3.

- In GTM:

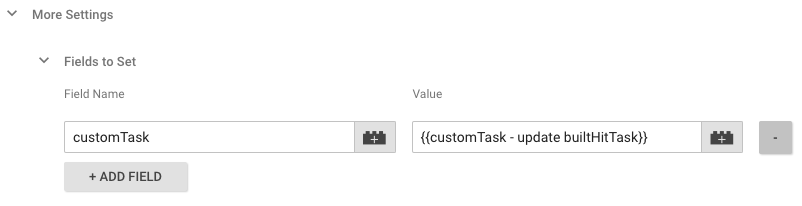

- Create a new Custom JavaScript variable called “customTask - update buildHitTask”. Paste the above code into that variable.

- Add a custom field to your tag (or ideally, GA Settings variable), and assign “customTask - update buildHitTask” to it like so:

- Publish and collect data, and then:

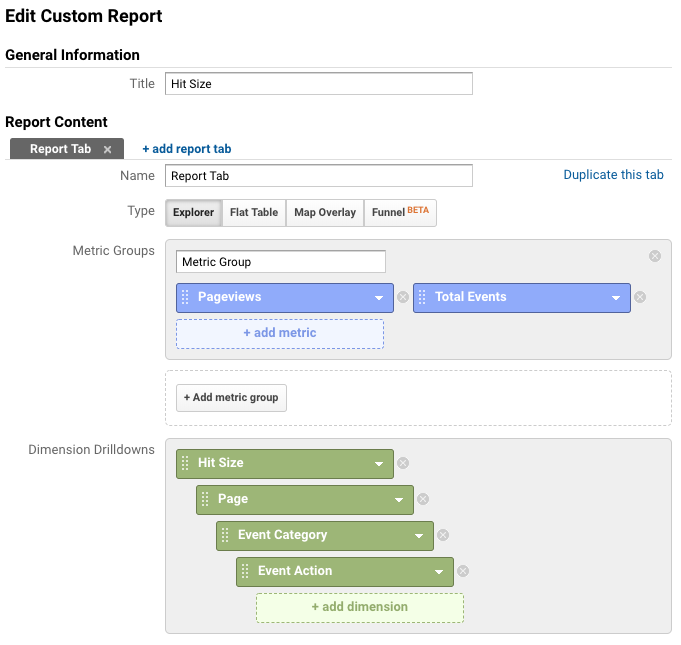

- Back in GA, use this custom report link to see data. Here’s the configuration:

Sort the results in descending order by Hit Size, and voila - you'll quickly have an indication of whether your hits are anywhere near the max hit size. You can also drill into the Page/Event Category/Event Action values (because, of course you'll be curious where those hits come from).
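One thing to watch for: custom dimension values are stored as text, so a sort in the report may come out alphabetical ("950" above "7900") rather than numeric. If that happens, exporting the rows and sorting them yourself is an easy workaround. Below is a rough sketch, assuming a hypothetical export shape of `{ hitSize, hits }` rows and a limit of 8192 bytes (the figure I've seen in Google's Measurement Protocol documentation; adjust it if yours differs). The `summarizeHitSizes` name and the 90% threshold are illustrative choices, not anything GA provides:

```javascript
// Assumed maximum hit size in bytes.
var MAX_HIT_BYTES = 8192;

// rows: hypothetical export, e.g. [{ hitSize: '512', hits: 1200 }, ...]
// threshold: fraction of the limit that counts as "near", e.g. 0.9
function summarizeHitSizes(rows, threshold) {
  // Convert the text dimension values to numbers, dropping anything unparseable.
  var parsed = rows.map(function (row) {
    return { size: parseInt(row.hitSize, 10), hits: row.hits };
  }).filter(function (row) {
    return !isNaN(row.size);
  });

  // Numeric sort, largest first.
  parsed.sort(function (a, b) {
    return b.size - a.size;
  });

  // Rows at or above the chosen fraction of the limit.
  var nearLimit = parsed.filter(function (row) {
    return row.size >= threshold * MAX_HIT_BYTES;
  });

  return {
    largest: parsed.length ? parsed[0].size : null,
    nearLimit: nearLimit
  };
}

// Made-up sample rows, for illustration only.
var sample = [
  { hitSize: '512', hits: 1200 },
  { hitSize: '7900', hits: 3 },
  { hitSize: '950', hits: 40 }
];

console.log(summarizeHitSizes(sample, 0.9));
```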

In my next technical post, we’ll examine how to handle hits that may be too big, by triggering limited events (smaller versions of the too-big hits) directly from the customTask. Stay tuned...

Side note: Dan Wilkerson at LunaMetrics wrote a very useful post about too-big-hits for eCommerce data, which was also helpful and is definitely worth a read (but solves a different problem).
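In the meantime, the "hit too big" comment left in the customTask code could be filled in along these lines. This is only a placeholder sketch, not the approach the next post will take: the `reportOversizedHit` helper, the `hitTooBig` event name, the `hitSize` key, and the 8192-byte limit are all assumptions for illustration.

```javascript
// Assumed maximum hit size in bytes; adjust if your limit differs.
var MAX_HIT_BYTES = 8192;

// Pushes a hypothetical "hitTooBig" event when the payload exceeds the limit.
// Returns true if the hit was flagged as oversized, false otherwise.
function reportOversizedHit(payload, dataLayer) {
  if (payload.length <= MAX_HIT_BYTES) {
    return false;
  }
  dataLayer.push({
    event: 'hitTooBig',      // hypothetical event name for a GTM trigger
    hitSize: payload.length  // hypothetical key for a Data Layer variable
  });
  return true;
}

// Stand-in for window.dataLayer so the sketch runs outside a browser.
var fakeDataLayer = [];

console.log(reportOversizedHit('v=1&t=pageview', fakeDataLayer));
console.log(reportOversizedHit(new Array(9001).join('a'), fakeDataLayer));
console.log(fakeDataLayer);
```

Inside the buildHitTask override, the call would sit right after `model.get('hitPayload')`, with `window.dataLayer` passed in as the second argument. The tag fired by that event should be a small one, since it goes through the same customTask.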